I'm using AI to 'read' my paintings and help me compose music. Here's how I do it.

Shane Guffogg is a multi-media abstract artist with synesthesia, meaning he "hears color."

Guffogg worked with AI experts and musicians to compose music that corresponds to his paintings.

He believes AI is still a tool that "needs oversight" but it's enhanced his creative process.

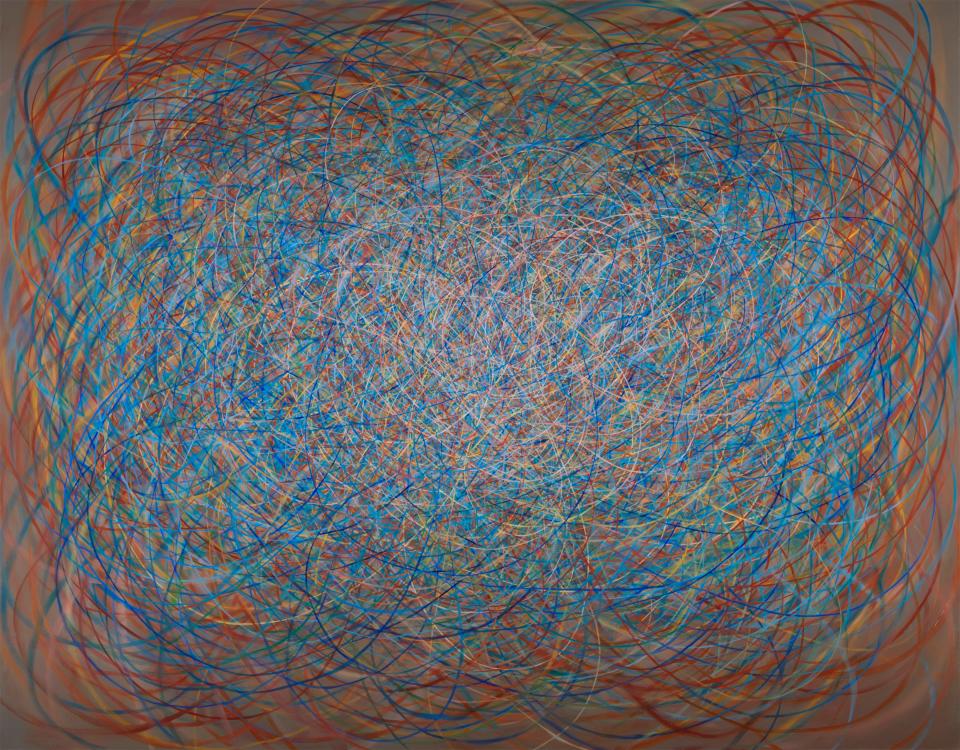

This is an as-told-to conversation with Shane Guffogg, an American artist who launched "At the Still Point of the Turning World – Strangers of Time," an exhibition of 21 paintings at the Venice Biennale earlier this month. This conversation has been condensed and edited for clarity.

I have synesthesia, which means I hear color.

So, what I'm listening to when I paint is important. I listen to Indian classical music, Gregorian chants, and some obscure composers such as György Ligeti, Leo Ornstein, and Terry Riley. The music sparks my creativity and allows me to be completely present and in that moment.

For years, I've been preoccupied with what my paintings might sound like. The AI revolution pushed me to search for experts who could help me. My first point of contact was Radhika Dirks, an AI and quantum computing expert. We had a couple of Zoom sessions, and she told me — to the best of her knowledge — that no AI program could help me. Instead, she suggested I create a visual alphabet that matched the musical chords I heard in my mind to colors.

I thought it could be a way to propel my creativity. It also built upon the idea of an unconscious alphabet that has informed my art throughout my career.

I met with musicians and AI experts to create a visual alphabet

I started by looking for musicians to collaborate with and met Anthony Cardella, a young, incredibly gifted pianist in Los Angeles. He's a Ph.D. student at USC and happened to know — and even play — many obscure composers I listen to when I paint.

We started collaborating. We would sit down and examine my paintings together. I would zoom in on a color in Photoshop, look at it, and sensorially feel the musical note. Then I would tell Anthony. I'd say, for example, I think that's the color of the note B. He'd hit the B, and I'd say, "No, that's not it; try a B sharp?" After a few trials, he'd suddenly hit the right notes. I would know because the colors would begin to vibrate for me. Together, we've charted chords that correspond to 40 colors.

Soon after, I met an AI researcher named Jonah Lynch through mutual contacts. He works at the intersection of the digital humanities and machine learning. I invited him over to my ranch in central California and explained the work I had been doing and how I created my paintings. We had long discussions about art, poetry, and creating an AI algorithm that could be fed the chords.

He developed a program to "read" my paintings and convert them into music. I gave him the main colors I used in each painting and the chords I hear when I see those colors. Jonah watched videos of me painting, studied the movement of my hands, and wrote software that sampled images of the paintings, following my hand movements, and assigned each color sampled from the paintings to its corresponding chord. Then, he fed this sequence of chords into a neural network that has memorized most of the last 500 years of keyboard music. He prompted the network to "dream" of new sequences based on the color-chord sequences and the history of Western music to create pages of sheet music.
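For readers curious how the color-to-chord step might look in code, here is a minimal sketch. It is not Jonah's software: the palette values, chord names, toy image, and brushstroke path are all made up for illustration, and matching each sampled pixel to the nearest palette color in RGB space is an assumption about one simple way to do the lookup. The neural network step is not modeled at all.

```python
from math import dist

# Hypothetical palette: RGB colors mapped to chord names (illustrative values only,
# not the artist's actual color-chord chart).
PALETTE = {
    (200, 30, 40): "C major",
    (30, 60, 180): "A minor",
    (240, 200, 40): "G major",
}

def nearest_chord(pixel, palette=PALETTE):
    """Return the chord whose palette color is closest to `pixel` in RGB space."""
    closest = min(palette, key=lambda color: dist(color, pixel))
    return palette[closest]

def chords_along_path(image, path, palette=PALETTE):
    """Sample `image` (rows of RGB tuples) at each (row, col) point in `path`
    and return the chord sequence, collapsing consecutive repeats."""
    sequence = []
    for row, col in path:
        chord = nearest_chord(image[row][col], palette)
        # Assumption: a stroke that stays in one color yields one chord, not many.
        if not sequence or sequence[-1] != chord:
            sequence.append(chord)
    return sequence

# A tiny 2x3 "painting" and a made-up path standing in for a hand movement.
image = [
    [(210, 40, 50), (205, 35, 45), (35, 65, 170)],
    [(235, 195, 50), (30, 55, 185), (245, 205, 35)],
]
path = [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2)]
print(chords_along_path(image, path))  # ['C major', 'A minor', 'G major']
```

In a sketch like this, the resulting chord list would be the raw material handed to a generative model, which is where the actual musical shaping would happen.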

When I heard that music played back to me, it brought tears to my eyes. It was just a rough version of what I heard while painting, but I thought, "There it is."

I took the music back to Anthony, the pianist. Amazingly, I could point to the sheet music and tell him what compositions I was listening to while painting, and he'd say, "Yes, I can see it in the chords." The Indian ragas, the Gregorian chants, the Ligeti, and Ornstein — they were all there.

Still, the music was largely a series of chords at that stage. Anthony said we could have melodies if we rearranged it a bit.

AI is still a tool that needs human oversight

We composed music for several paintings and have played it for audiences worldwide. We held a concert last month at the Forest Lawn Museum in Los Angeles, where I also had a few paintings in a show. The audience could look at the paintings while Anthony played, which was a profound experience. A couple of people cried.

At the launch of my latest exhibition during the opening week of the Venice Biennale, Anthony played the world premiere of a sonata he composed, inspired by my painting "Only Through Time Time is Conquered," for a live audience. After the performance, I talked to several people, and they said they could see where the colors and the notes met on the painting. It was something they had never experienced.

I know many people are very afraid of AI, and I, too, see it as a tool that needs human oversight. It's not a means to an end. Still, it opened up many possibilities and enhanced my creative process. I don't know if I could have unlocked the musicality in my paintings in a real way without it.

Read the original article on Business Insider